In Oracle database system, a “schema” account is actually a user and this user will be associated with a dedicated tablespace where database objects will be stored in. Having said that…there is a SQL command in Oracle called “create schema” but it actually doesn’t create the schema account (user)….it creates the objects and permissions associated with it.

Let us me first demonstrate how to create a schema account:

Will first create a tablespace and will call it “TS_HR” in pluggable database FREEPDB1:

CREATE BIGFILE TABLESPACE “TS_HR” DATAFILE

‘/opt/oracle/oradata/FREE/FREEPDB1/HRD01.dbf’ SIZE 20M

AUTOEXTEND ON NEXT 2M MAXSIZE 4000M

LOGGING ONLINE PERMANENT BLOCKSIZE 8192

EXTENT MANAGEMENT LOCAL AUTOALLOCATE DEFAULT

NOCOMPRESS SEGMENT SPACE MANAGEMENT AUTO;

Then, I will create a database user (schema) with the name HR as follows with default tablespace TS_HR (execute the below SQL commands):

CREATE USER HR identified by hr

DEFAULT TABLESPACE “TS_HR”

TEMPORARY TABLESPACE “TEMP”;

grant create session to HR;

grant resource to HR;

alter user hr quota unlimited on ts_hr;

alter user hr default role all;

Technically we are done….a schema account called “HR” is created in database system.

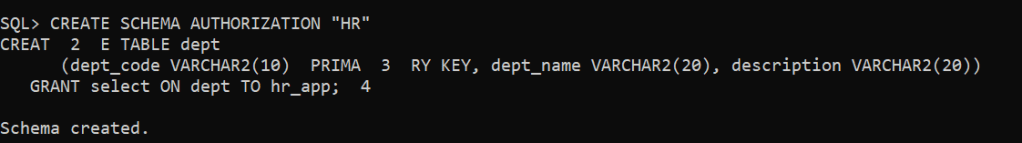

Now, how to use create schema SQL command….let me deonstrate:

Connect to the pluggable database as the schema account HR:

sqlplus “hr/hr”@FREEPDB1

SQL> CREATE SCHEMA AUTHORIZATION “HR”

CREATE TABLE dept

(dept_code VARCHAR2(10) PRIMARY KEY, dept_name VARCHAR2(20), description VARCHAR2(20))

GRANT select ON dept TO hr_app;

Schema created.

Database table called “dept” created under HR schema and granted permission to pre-exisiting HR_APP account as shown above.

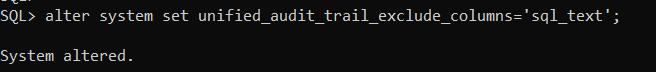

Important remark: oracle introduced “schema only” account concept which will prevent the account/user from being directly authenticated and can be accessed through proxy setup.

SQL command is: alter user hr no authentication;