I will first create the tablespace and the vector user in the pluggable database FREEPDB1:

SQL> create bigfile tablespace TBS_VECTOR datafile '/opt/oracle/oradata/FREE/FREEPDB1/VECTOR.dbf' size 256M autoextend on maxsize 1G;

SQL> create user vector_user identified by "vectorai"
default tablespace TBS_VECTOR temporary tablespace TEMP
quota unlimited on TBS_VECTOR;

SQL> GRANT create mining model TO vector_user;

SQL> grant DB_DEVELOPER_ROLE to vector_user;
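Before moving on, it can be worth confirming that the user was created with the intended defaults. The following is a minimal sketch, assuming you run it as SYS (or another DBA account) in FREEPDB1. It relies only on the standard DBA_USERS, DBA_TS_QUOTAS, DBA_ROLE_PRIVS and DBA_SYS_PRIVS dictionary views; in DBA_TS_QUOTAS a MAX_BYTES value of -1 means the quota is unlimited.

```sql
-- Run as SYS in FREEPDB1 (assumption): default and temporary tablespaces of the new user
SELECT username, default_tablespace, temporary_tablespace
FROM dba_users
WHERE username = 'VECTOR_USER';

-- MAX_BYTES = -1 indicates QUOTA UNLIMITED on the tablespace
SELECT tablespace_name, max_bytes
FROM dba_ts_quotas
WHERE username = 'VECTOR_USER';

-- Roles (DB_DEVELOPER_ROLE) and system privileges (CREATE MINING MODEL) granted directly
SELECT granted_role AS grant_name FROM dba_role_privs WHERE grantee = 'VECTOR_USER'
UNION ALL
SELECT privilege FROM dba_sys_privs WHERE grantee = 'VECTOR_USER';
```

Unquoted identifiers are stored in upper case, which is why the filters use 'VECTOR_USER'.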

As the SYS user, run the following statements against the FREEPDB1 pluggable database:

SQL> CREATE OR REPLACE DIRECTORY dm_dump as '/opt/oracle/admin/FREE/dpdump/';

SQL> GRANT READ, WRITE ON DIRECTORY dm_dump TO vector_user;
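A directory object is only a pointer: Oracle does not verify that the operating-system path exists when the directory is created. A quick sanity check, again assuming a SYS session in FREEPDB1, might look like the sketch below. Directory grants appear in DBA_TAB_PRIVS with the directory name in the TABLE_NAME column.

```sql
-- Run as SYS in FREEPDB1 (assumption): the directory object and the OS path it maps to
SELECT directory_name, directory_path
FROM dba_directories
WHERE directory_name = 'DM_DUMP';

-- READ and WRITE grants on the directory, listed under TABLE_NAME
SELECT grantee, privilege
FROM dba_tab_privs
WHERE table_name = 'DM_DUMP'
AND grantee = 'VECTOR_USER';
```

If the path returned here does not match where the ONNX file is placed, the model load will fail to find the file.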

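You can download the ONNX file from here:

Copy all-MiniLM-L6-v2.onnx into /opt/oracle/admin/FREE/dpdump/, the path that DM_DUMP points to, then connect as vector_user and load the model: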

sqlplus vector_user/vectorai@FREEPDB1

SQL> EXECUTE DBMS_VECTOR.LOAD_ONNX_MODEL('DM_DUMP', 'all-MiniLM-L6-v2.onnx', 'doc_model')

PL/SQL procedure successfully completed.

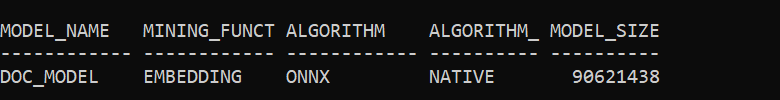
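Once the load completes, a simple functional test is to ask the model for an embedding. The sketch below uses the VECTOR_EMBEDDING and VECTOR_DIMENSION_COUNT SQL functions. It assumes the model's input attribute is named DATA, which holds for Oracle's prebuilt all-MiniLM-L6-v2 file but may differ if you converted the ONNX model yourself; the sample sentence is arbitrary. Because all-MiniLM-L6-v2 produces 384-dimensional embeddings, the second query should report 384.

```sql
-- Run as vector_user: embed an arbitrary sample sentence with the loaded model
-- (assumes the model input attribute is called DATA)
SELECT VECTOR_EMBEDDING(doc_model USING 'hello world' AS data) AS embedding
FROM dual;

-- Print only the number of dimensions instead of the full vector
SELECT VECTOR_DIMENSION_COUNT(
         VECTOR_EMBEDDING(doc_model USING 'hello world' AS data)
       ) AS dimensions
FROM dual;
```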

To verify that the model is loaded, execute the following query as vector_user:

col model_name format a12
col mining_function format a12
col algorithm format a12
col attribute_name format a20
col data_type format a20
col vector_info format a30
col attribute_type format a20
set lines 120

SELECT model_name, mining_function, algorithm,
algorithm_type, model_size
FROM user_mining_models
WHERE model_name = 'DOC_MODEL'
ORDER BY model_name;
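The column formats set for attribute_name, data_type, vector_info and attribute_type are not used by the query above; they appear to be meant for a follow-up query on the USER_MINING_MODEL_ATTRIBUTES view, which lists the model's input attribute and its vector output. A sketch of such a query, reusing the same SQL*Plus settings:

```sql
-- Run as vector_user: input attribute(s) and vector output of DOC_MODEL
-- VECTOR_INFO describes the output vector (dimension count and format)
SELECT model_name, attribute_name, attribute_type, data_type, vector_info
FROM user_mining_model_attributes
WHERE model_name = 'DOC_MODEL'
ORDER BY attribute_name;
```

The model name is filtered in upper case because 'doc_model' was passed unquoted to LOAD_ONNX_MODEL and is stored as DOC_MODEL.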