Some of the database components can’t be installed unless you install a pre-requisite components first prior to installing it.

Here I am listing all of them for guidance:

Some of the database components can’t be installed unless you install a pre-requisite components first prior to installing it.

Here I am listing all of them for guidance:

One of the new exciting features of Oracle 12c is the ability to restore a Table using RMAN which was not possible in previous Oracle releases.

To implement this there are pre-requisites:

Let us proceed in testing:

I will create a new dummy table based on actual table existing in the database:

SQL> create table TESTSCHEMA.DUMMY_TABLE as select * from TESTSCHEMA.EMPLOYEE_INFO;

Now check the current SCN number and use it for the command later on:

SQL> select dbms_flashback.get_system_change_number from dual;

OR use this query:

SQL> select current_scn from v$database;

OR check the SCN generated based on the tablespace backup:

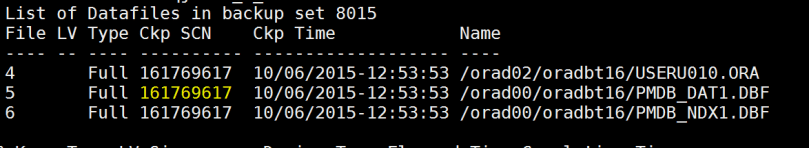

RMAN> list backup;

OR

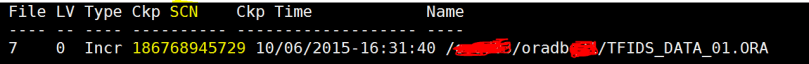

Use the following command to check the backup of the data file associated with the tablespace:

This query will report the FILE_ID number that can be used with the below RMAN command to get the SCN for the tablespace:

select TABLESPACE_NAME,FILE_ID from dba_data_files;

then,

rman target /

RMAN> LIST BACKUP OF DATAFILE 7;

***Before Dropping the table make sure you have a valid successful backup:

RMAN> backup database;

Then drop the newly created table:

SQL>Drop table TESTSCHEMA.DUMMY_TABLE;

Command to recover the table:

recover table ‘TESTSCHEMA’.’DUMMY_TABLE’ until scn 161451617 auxiliary destination ‘/oracle/app/’;

You need to wait as it will take time with many messages that is going to be generated….

Important Remark: you will notice messages about AUX Database being shutdown and startup, DON’T PANIC (this is not the database you are working on it, from behind the scene an auxiliary database is being created while table restoration is taking place).

You can safely remove the files generated under the “auxiliary destination” directory.

****The following are another alternatives for the same action:

A. You can also recover the table to be exported as a dump file (where you can import the table later on ) :

recover table ‘ TESTSCHEMA ‘. ‘DUMMY_TABLE’ until scn 161773617 auxiliary destination ‘/oracle/app/’ DATAPUMP DESTINATION ‘/oracle/export/’ DUMP FILE ‘table_recovery_dump.dmp’ notableimport;

B. You can recover the table to be renamed to a different name while recovered:

recover table ‘TESTSCHEMA’.’DUMMY_TABLE’ until scn 161773617 auxiliary destination ‘/oracle/app/’ remap table TESTSCHEMA.DUMMY_TABLE:DUMMY_TABLE_TEMP;

Here I am exploring two new Oracle datapump parameters that have been introduced in Oracle 12cR1 (12.1.0.2). They are: views_as_tables & logtime.

views_as_tables : will allow you to export the specified schema views as tables.

Logtime: will provide timestamp for each operation being performed while pump operations is running (in my opinion: very useful).

Export datapump command using both parameters example:

nohup $ORACLE_HOME/bin/expdp “‘/as sysdba ‘” DIRECTORY=PUMP_DIR DUMPFILE=test_view_as_table.dmp views_as_tables=SM_DEV.ANAL_COMP_VIEW logfile=export_table_as_view.log logtime=all &

***** If you face the below error while performing expdp with “logtime” parameter:

UDE-31623: operation generated ORACLE error 31623

ORA-31623: a job is not attached to this session via the specified handle

ORA-06512: at “SYS.DBMS_DATAPUMP”, line 3905

ORA-06512: at “SYS.DBMS_DATAPUMP”, line 5203

ORA-06512: at line 1

***** To resolve this problem I have checked the following:

1.I have checked that the streams parameter and it was beyond 40M which is OK.

select * from dba_registry;

3. The final resolution was to unset NLS_LANG environment variable.

Which solved my error problems!!!

To return back to our initial thread, When you check the content of the log file the time stamps are included which is useful to check the process that is consuming more time.

here is an example of export logfile with time stamp dates added: